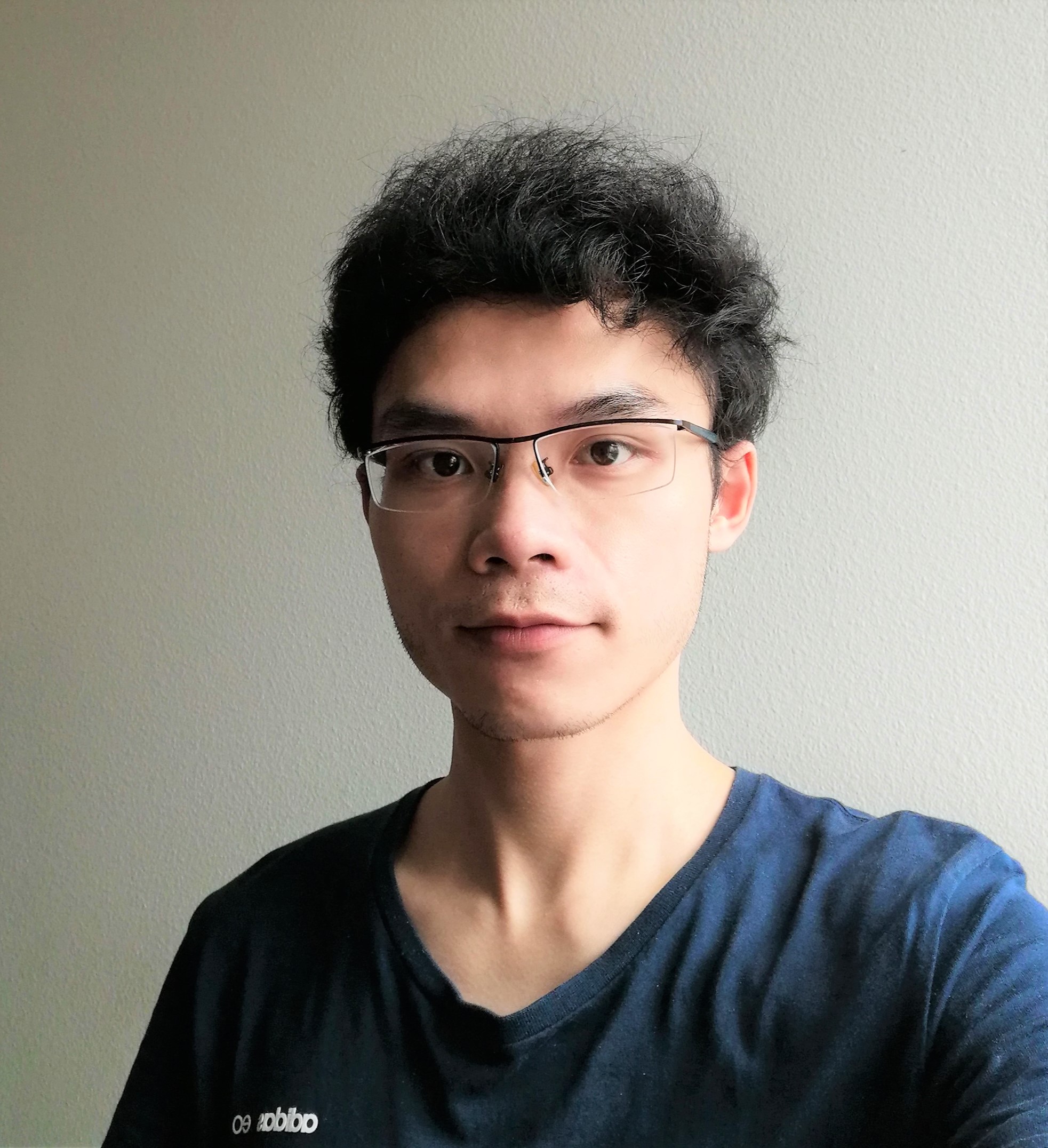

Xuhui Zhou

GHC 5705

4902 Forbes Ave

Pittsburgh, PA 15213

I am a PhD student at the Language Technologies Institute at CMU, fortunately advised by Maarten Sap. I am interested in socially intelligent human language technology. More specifically (yet still vague), I am interested in the following Qs:

- How do we define and build language technology that interacts with humans/society positively?

- How do we create better (socially) grounded language technology with commonsense?

- How does (social) language intelligence emerge through human language communication?